Alpie Core: 4-bit Quantized Reasoning Model

TL;DR

- 32B reasoning model, trained & served at 4-bit quantization

- Competitive with GPT-4o / Claude 3.5 Sonnet on reasoning & coding benchmarks

- 65K context length for long-document reasoning

- Open source (Apache 2.0) - fully permissive for commercial use

- Available via Ollama, Hugging Face, and hosted API with 5M free tokens

📄 Technical Report: Alpie Core.pdf

How to Use Alpie Core

Option 1: Local Inference with Ollama (Recommended for Quick Start)

```bash
# Pull the model (20GB)
ollama pull 169pi/alpie-core

# Run inference
ollama run 169pi/alpie-core
```

Requirements: 20GB RAM/VRAM minimum
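Besides the interactive `ollama run` session, a running Ollama server exposes a local HTTP API, by default on `http://localhost:11434`. The minimal sketch below calls its `/api/generate` endpoint using only the Python standard library. It assumes the server runs locally with default settings and that the model was pulled under the tag shown above. The helper names `build_generate_request` and `generate` are hypothetical.

```python
import json
import urllib.request

OLLAMA_HOST = "http://localhost:11434"  # Ollama's default address (assumed unchanged)
MODEL_TAG = "169pi/alpie-core"          # tag used with `ollama pull` above


def build_generate_request(prompt: str, model: str = MODEL_TAG,
                           host: str = OLLAMA_HOST) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{host}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, timeout: float = 600.0) -> str:
    """Send the request and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_generate_request(prompt), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False, Ollama returns one JSON object whose "response" field holds the text
    return body["response"]
```

With the server running, `print(generate("What is the integral of x^2?"))` prints the model's full answer once generation finishes.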

Option 2: Hosted Inference via 169Pi API

Get started instantly with our hosted API - no setup required!

Get your first free API key, which includes 5 million tokens for testing real workloads.

- OpenAI-compatible - drop-in replacement for OpenAI SDK

- Supports streaming, async, and long-context reasoning

- Production-ready with low latency
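Because the hosted API is described as OpenAI-compatible, a plain HTTP request in the OpenAI chat-completions shape should work without any SDK. The sketch below uses only the standard library. The card does not state the endpoint URL, so `base_url` must be supplied by you. The model id `"alpie-core"` is borrowed from the SDK example and is assumed to apply here too. All helper names are hypothetical.

```python
import json
import urllib.request

# Hypothetical: the card does not give the endpoint URL; pass your own base_url.
# The model id "alpie-core" is taken from the SDK example and assumed valid here.


def build_chat_request(base_url: str, api_key: str, prompt: str,
                       model: str = "alpie-core") -> urllib.request.Request:
    """Build an OpenAI-style POST {base_url}/chat/completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # OpenAI-style bearer auth
        },
        method="POST",
    )


def extract_reply(response_json: dict) -> str:
    """Pull the assistant text out of an OpenAI-style chat completion response."""
    return response_json["choices"][0]["message"]["content"]


def chat(base_url: str, api_key: str, prompt: str, timeout: float = 600.0) -> str:
    """Send one chat turn and return the reply text (needs network access)."""
    req = build_chat_request(base_url, api_key, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return extract_reply(json.loads(resp.read().decode("utf-8")))
```

Since the request follows the OpenAI shape, the official `openai` client pointed at the same `base_url` should behave equivalently. That is the practical meaning of "drop-in replacement".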

Option 3: Programmatic Access with Python SDK

```bash
# Install the official SDK
pip install pi169

# Set your API key
export ALPIE_API_KEY="your_key_here"

# Use via CLI
pi169 "Explain quantum entanglement"
```

Or use it in Python:

```python
import os

from pi169 import AlpieClient

# Reuse the key exported above instead of hard-coding it in source
client = AlpieClient(api_key=os.environ["ALPIE_API_KEY"])

response = client.chat.completions.create(
    model="alpie-core",
    messages=[{"role": "user", "content": "Solve this coding problem..."}],
    stream=True,
)
```

SDK Features: Streaming, async/await, OpenAI compatibility, type-safe interface
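With `stream=True`, the reply arrives incrementally. The card does not document the chunk objects that the `pi169` SDK yields, so rather than guess at its types, the sketch below shows the wire format that OpenAI-compatible endpoints generally use for streaming. It consists of server-sent events in which each `data:` line carries a JSON chunk with a `choices[0].delta.content` fragment, and `data: [DONE]` ends the stream. That Alpie's API follows exactly this format is an assumption, and `iter_stream_text` is a hypothetical helper.

```python
import json
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from OpenAI-style server-sent-event lines."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and other SSE fields
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # end-of-stream sentinel
        chunk = json.loads(data)
        choices = chunk.get("choices") or []
        if not choices:
            continue
        # Only the first choice is followed; role-only deltas carry no content
        piece = choices[0].get("delta", {}).get("content")
        if piece:
            yield piece
```

Over a raw HTTP response, you would feed it decoded lines, for example `for piece in iter_stream_text(line.decode("utf-8") for line in resp): print(piece, end="")`.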

Option 4: Load Directly with Transformers (Advanced)

```python
from transformers import AutoModelForCausalLM, AutoTokenizer
from peft import PeftModel, PeftConfig
import torch

# Load LoRA adapter configuration
peft_model_id = "169Pi/Alpie-Core"
config = PeftConfig.from_pretrained(peft_model_id)

# Load base model + LoRA weights
base_model = AutoModelForCausalLM.from_pretrained(
    config.base_model_name_or_path,
    torch_dtype=torch.float16,
    device_map="auto",
)
tokenizer = AutoTokenizer.from_pretrained(config.base_model_name_or_path)
model = PeftModel.from_pretrained(base_model, peft_model_id)

# Inference
prompt = "Solve: What is the integral of x^2?"
inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
outputs = model.generate(**inputs, max_new_tokens=1000)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))
```
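The example above feeds a raw string to the model. The base model, DeepSeek-R1-Distill-Qwen-32B, ships a chat template, so formatting the prompt with `tokenizer.apply_chat_template` may be closer to how the model expects input. The card does not say which template was used in fine-tuning, however. R1-distilled models also typically write their chain of thought before a closing `</think>` tag. Assuming Alpie Core keeps that convention, `split_reasoning` below separates the reasoning from the final answer. Both helper names are hypothetical.

```python
from typing import Tuple


def build_chat_input_ids(tokenizer, question: str):
    """Format a single-turn chat with the tokenizer's own template (assumed suitable)."""
    messages = [{"role": "user", "content": question}]
    # add_generation_prompt=True appends the assistant-turn header so the model answers
    return tokenizer.apply_chat_template(
        messages, add_generation_prompt=True, return_tensors="pt", return_dict=False
    )


def split_reasoning(text: str, end_tag: str = "</think>") -> Tuple[str, str]:
    """Split decoded output into (reasoning, answer) at the last closing think tag.

    If the tag is absent, the whole text is treated as the answer.
    """
    head, sep, tail = text.rpartition(end_tag)
    if not sep:
        return "", text.strip()
    # The template may already put the opening tag in the prompt, so strip it only if present
    reasoning = head.replace("<think>", "", 1).strip()
    return reasoning, tail.strip()
```

For example, first run `input_ids = build_chat_input_ids(tokenizer, "What is the integral of x^2?").to(model.device)` and `outputs = model.generate(input_ids, max_new_tokens=1000)`. Then call `split_reasoning(tokenizer.decode(outputs[0][input_ids.shape[-1]:], skip_special_tokens=True))`. If your tokenizer treats the think tags as special tokens, decode without skipping them.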

Why Alpie Core?

Alpie Core is one of the first fine-tuned 4-bit reasoning models from India, and among the first worldwide at this scale. Trained on just 8 Hopper GPUs using LoRA and QLoRA 4-bit quantization with synthetic STEM-rich datasets, it proves that aggressive quantization can match and even surpass full-precision baselines.

With a dramatically reduced memory footprint, Alpie Core delivers competitive, frontier-level reasoning performance, even beating top proprietary models. It achieves:

- 81.28% on MMLU (5-shot)

- 92.75% on GSM8K (8-shot)

- 57.8% on SWE-Bench Verified (ranked #1 globally)

This demonstrates that efficient models can rival frontier systems while remaining practical for real-world deployment at scale.

Model Summary

- Base Architecture: DeepSeek-R1-Distill-Qwen-32B

- Parameters: 32 billion (quantized to 4-bit)

- Training Method: Supervised Fine-Tuning (SFT) using LoRA/QLoRA

- Quantization: 4-bit NF4 with double quantization

- Context Length: 65k tokens

- Max Output Length: 16,384 tokens

- Training Data: Synthetic (STEM, reasoning, coding) + curated data (law, Indian context, exams, multilingual)

- License: Apache 2.0
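A quick back-of-the-envelope calculation shows where the memory savings of 4-bit quantization come from. At 4 bits per parameter, 32 billion weights occupy about 16 GB, compared with about 64 GB in FP16. This counts the weights alone. It ignores NF4's per-block quantization constants (which double quantization shrinks), the LoRA adapter, activations, and the KV cache, so real usage is higher. The function name is illustrative.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Decimal gigabytes needed to store the model weights alone."""
    # bits -> bytes (/8) -> gigabytes (/1e9)
    return num_params * bits_per_param / 8 / 1e9


# 32B parameters at 4-bit vs. FP16
four_bit = weight_memory_gb(32e9, 4)   # 16.0
fp16 = weight_memory_gb(32e9, 16)      # 64.0
```

This weights-only figure lines up with the 16 GB VRAM footprint quoted in Key Highlights. The gap to the 20GB Ollama requirement leaves room for runtime overhead.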

Approach

Alpie Core underwent extensive supervised fine-tuning (SFT) to strengthen reasoning, robustness, and safety. The training leveraged a diverse mixture of curated open-source datasets and proprietary synthetic data, optimized with high-quality LLM-generated responses. The fine-tuning process emphasized:

- User Understanding and Clarity – ensuring outputs are direct, interpretable, and pedagogically sound

- Security and Ethical Guidelines – filtering unsafe or harmful generations

- Limitations and Knowledge Boundaries – transparently communicating uncertainty

- Handling Complex and Sensitive Topics – balancing informativeness with responsible guardrails

- Safety and Respectful Engagement – maintaining politeness, inclusivity, and cultural sensitivity

- Confidentiality and Responsible Use – preventing leakage of private data or internal reasoning traces

This approach enables Alpie Core to deliver reliable, aligned, and context-aware responses while maintaining safety across a broad range of use cases in both global and Indian contexts.

Model Features

- Supports Streaming – Real-time token-level responses

- OpenAI-Compatible API – Seamless integration with OpenAI client libraries

- 65K Context Length – Handles very large inputs and conversations

- 16,384 Max Output Length – Enables extremely long generations

- 4-Bit Quantization – Memory-efficient and optimized for deployment

- High Throughput Inference – Powered by vLLM for efficient large-scale serving

- Low Latency Inference – Fast response times optimized for production

- Customizable Safety & Moderation – Built-in guardrails for safer outputs

- Supports Function Calling / Tool Use – Structured outputs and external API integration

- Instruction Following – Optimized for reasoning and chain-of-thought answers

- Education & Research Ready – Tailored for competitive exams, STEM reasoning, and knowledge tasks

Key Highlights

- One of the First 4-bit Reasoning Models from India: Competitive globally with frontier models

- Benchmark Competitiveness: Outperforms or matches 70B+ models on most of the reasoning, math, and coding benchmarks reported below

- STEM & Coding Strength: Excellent on GSM8K, MATH-500, HumanEval, SWE-Bench Verified

- Efficiency & Deployment: 16 GB VRAM footprint, runs on commodity GPUs

- Extended Context Length: 65K tokens for research papers, multi-document reasoning

- Environmental Benefits: ~298–835 kg CO₂e, 2–3× more efficient than FP16 training

- Open-Source Commitment: Released under Apache 2.0 for global use
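The 16 GB footprint covers the quantized weights. Long contexts add a key/value cache that grows linearly with sequence length. The sketch below estimates it per sequence. The architecture values (64 layers, 8 grouped-query KV heads, head dimension 128) are assumptions taken from the Qwen2.5-32B family that the base model derives from, so verify them against the base model's `config.json`. The cache is assumed to be stored in FP16.

```python
def kv_cache_bytes(num_tokens: int,
                   num_layers: int = 64,      # assumed from the base model's config.json
                   num_kv_heads: int = 8,     # grouped-query attention KV heads (assumed)
                   head_dim: int = 128,       # 5120 hidden size / 40 attention heads (assumed)
                   bytes_per_value: int = 2,  # FP16 cache
                   ) -> int:
    """Bytes needed to cache keys and values for one sequence of num_tokens."""
    # Factor 2 = one key tensor plus one value tensor per layer
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value * num_tokens


per_token = kv_cache_bytes(1)               # 262,144 bytes = 256 KiB
full_context_gib = kv_cache_bytes(65_536) / 2**30
```

Under these assumptions, one sequence at 65,536 tokens needs 16 GiB of KV cache on top of the weights. Full-length documents therefore need substantially more memory than the weights alone.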

Benchmark Results

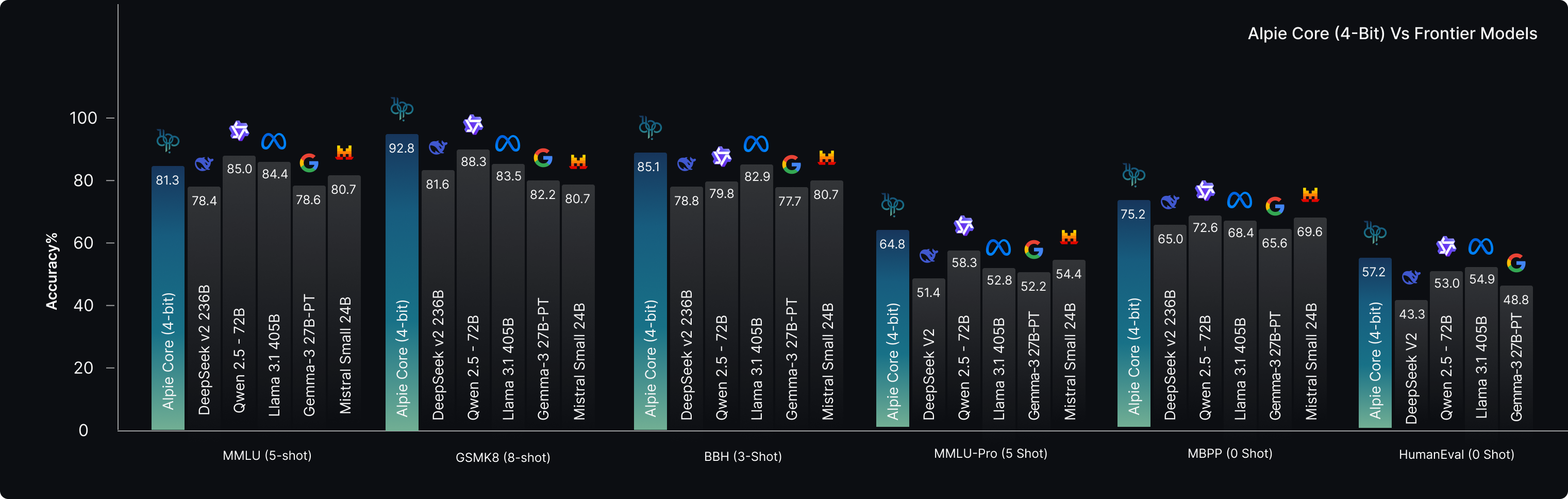

Core Benchmarks

| Benchmark | Alpie Core (32B-4bit) | DeepSeek-V2 (236B) | Qwen2.5 72B | Llama 3.1 405B | Llama 3.1 70B | Gemma-3 27B-PT | Mistral-Small-24B |
|---|---|---|---|---|---|---|---|
| MMLU (5-shot) | 81.28% | 78.4% | 85.0% | 84.4% | 79.3% | 78.6% | 80.73% |
| GSM8K (8-shot) | 92.75% | 81.6% | 88.3% | 83.5% | - | 82.2% | 80.73% |
| BBH (3-shot) | 85.12% | 78.8% | 79.8% | 82.9% | 81.6% | 77.7% | - |
| MMLU-Pro (5-shot) | 64.78% | 51.4% | 58.3% | 52.8% | 53.8% | 52.2% | 54.37% |
| MBPP (pass@1) | 75.20% | 65.0% | 72.6% | 68.4% | - | 65.6% | 69.64% |
| HumanEval (pass@1) | 57.23% | 43.3% | 53.0% | 54.9% | - | 48.8% | - |

SWE-Bench Verified Performance (#1 Globally)

| Rank | Model | Accuracy (%) | vs Alpie (pts) |
|---|---|---|---|
| 1 | Alpie Core | 57.8 | - |
| 2 | Qwen3-Coder-30B-A3B-Instruct | 51.6 | -6.2 |
| 3 | o3-mini (high) | 49.3 | -8.5 |
| 4 | DeepSeek R1 | 49.2 | -8.6 |
| 5 | Claude 3.5 Sonnet | 49.0 | -8.8 |
| 6 | o1 | 48.9 | -8.9 |
| 7 | Devstral | 46.8 | -11.0 |

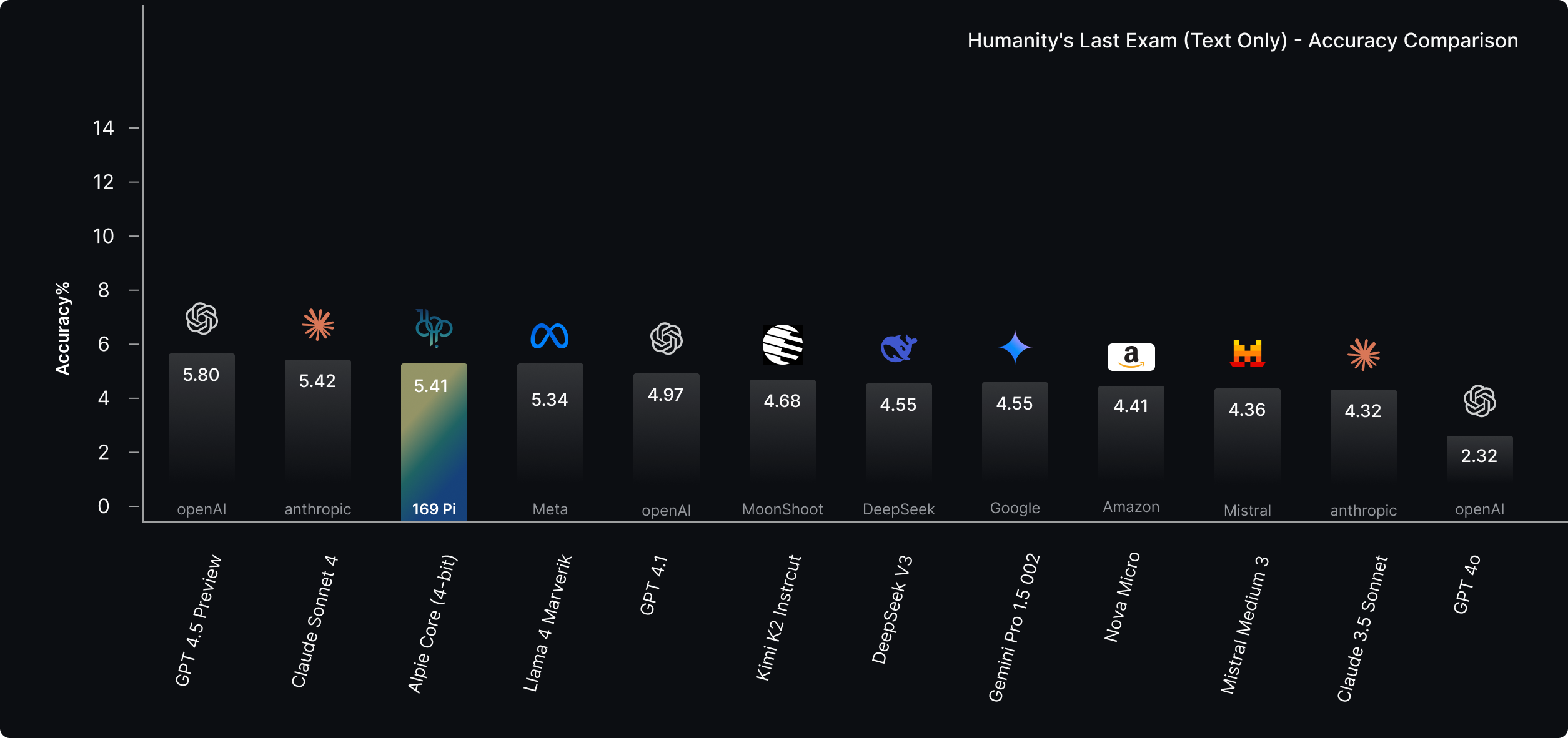
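The gap to Alpie Core in the SWE-Bench Verified table is a difference in percentage points, not a relative change. The snippet below recomputes the gaps from the accuracies in the table, sorts models by score, and also reports the relative gap. For example, Qwen3-Coder's 6.2-point deficit is about 10.7% of Alpie's score. The names are illustrative.

```python
ALPIE_SCORE = 57.8
SWE_BENCH_VERIFIED = {  # accuracies (%) from the table
    "Qwen3-Coder-30B-A3B-Instruct": 51.6,
    "o1": 48.9,
    "o3-mini (high)": 49.3,
    "Claude 3.5 Sonnet": 49.0,
    "DeepSeek R1": 49.2,
    "Devstral": 46.8,
}


def leaderboard(scores: dict, reference: float) -> list:
    """Return (model, score, point gap, relative gap %) rows, best score first."""
    rows = []
    for model, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
        points = round(score - reference, 1)                    # percentage points
        relative = round(100 * (score - reference) / reference, 1)  # % of reference
        rows.append((model, score, points, relative))
    return rows


rows = leaderboard(SWE_BENCH_VERIFIED, ALPIE_SCORE)
```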

Humanity's Last Exam Leaderboard (#3 Globally)

| Rank | Model | Accuracy (%) | vs Alpie (pts) |
|---|---|---|---|
| 1 | GPT 4.5 Preview | 5.8 | +0.39 |
| 2 | Claude Sonnet 4 | 5.42 | +0.01 |
| 3 | Alpie Core 32B (4-bit) | 5.41 | - |
| 4 | Llama 4 Maverick | 5.34 | -0.07 |
| 5 | GPT 4.1 | 4.97 | -0.44 |
| 6 | Kimi K2 Instruct | 4.68 | -0.73 |
| 7 | DeepSeek V3 | 4.55 | -0.86 |

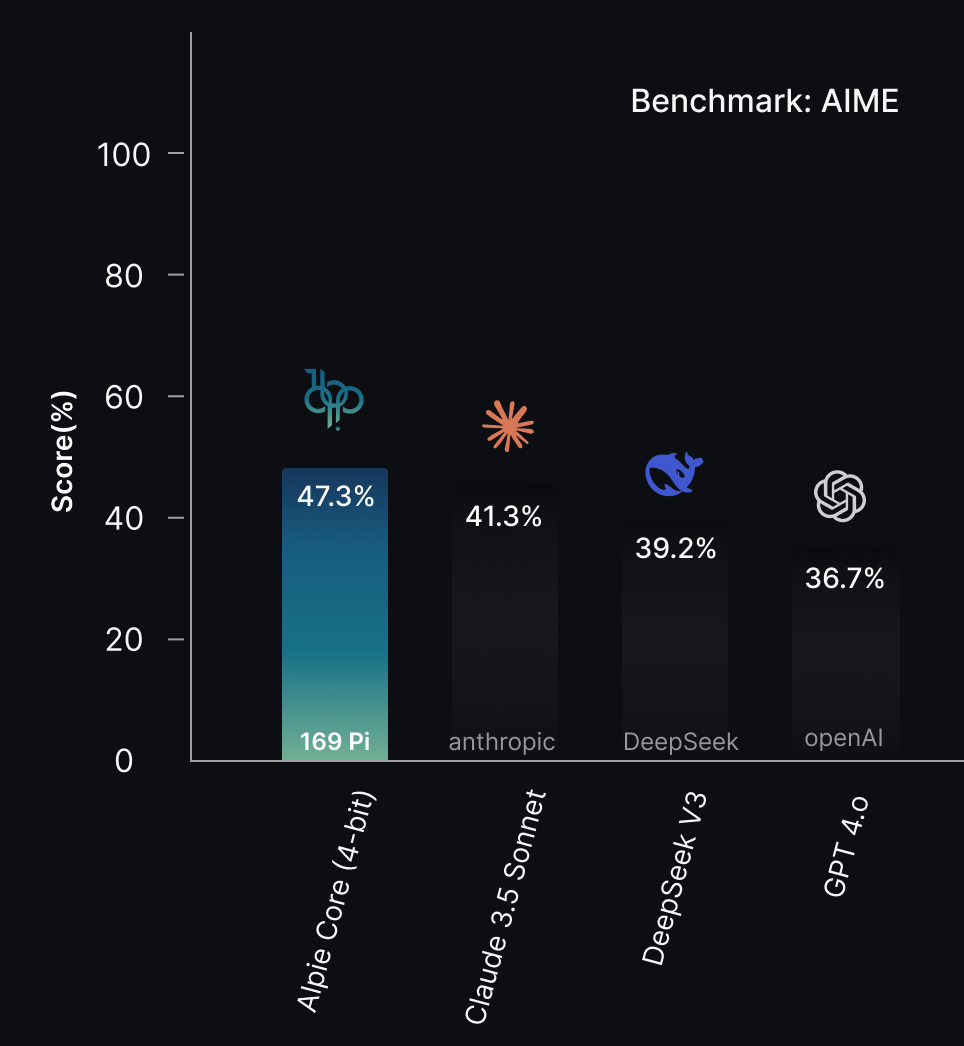

Additional Benchmarks

| Benchmark | Alpie Core | Category |
|---|---|---|
| AIME | 47.34% | Advanced Mathematics |
| GPQA (Diamond) | 40.91% | Graduate-level QA |
| TruthfulQA (MC2) | 60.05% | Truthfulness |
| HellaSwag | 84.66% | Commonsense |
| PIQA | 83.24% | Physical Reasoning |
| ARC Challenge | 67.58% | Science QA |
| CommonSenseQA | 87.06% | Commonsense |
| AGIEval | 64.98% | General Intelligence |
| Winogrande | 79.53% | Commonsense Reasoning |
| MATH-500 | 70.00% | Advanced Mathematics |

Training Details

- Hardware: 8× NVIDIA H100-80GB GPUs

- Fine-tuning Method: LoRA/QLoRA

- LoRA Alpha: 16

- LoRA Dropout: 0.05

- LoRA Rank: 16

- Quantization: 4-bit NF4 + Double Quantization + FP16 compute

- Dataset Domains: Mathematics, coding, reasoning, science, competitive exams, Indian context + law, multilingual (Hindi/Hinglish)

- Synthetic Data Advantage: +15-20% performance boost in STEM & coding

- Training Strategy: Multi-stage distillation → SFT → safety alignment

- Total Training Time: 408 hours
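The quantization and LoRA settings listed above map directly onto the Hugging Face `BitsAndBytesConfig` and PEFT `LoraConfig` objects. The sketch below is a reconstruction from the listed hyperparameters, not the authors' training script. The card does not state `target_modules`, so it is left to PEFT's built-in default for the base architecture. Imports sit inside the function so the module loads even where these libraries are absent. `make_qlora_configs` is a hypothetical name.

```python
LORA_R = 16
LORA_ALPHA = 16
LORA_DROPOUT = 0.05


def make_qlora_configs():
    """Build 4-bit NF4 + double-quant and LoRA configs matching the listed values."""
    import torch
    from peft import LoraConfig
    from transformers import BitsAndBytesConfig

    bnb_config = BitsAndBytesConfig(
        load_in_4bit=True,
        bnb_4bit_quant_type="nf4",             # 4-bit NormalFloat
        bnb_4bit_use_double_quant=True,        # also quantize the quantization constants
        bnb_4bit_compute_dtype=torch.float16,  # FP16 compute
    )
    lora_config = LoraConfig(
        r=LORA_R,
        lora_alpha=LORA_ALPHA,
        lora_dropout=LORA_DROPOUT,
        task_type="CAUSAL_LM",
        # target_modules not given in the card; PEFT picks its default for known architectures
    )
    return bnb_config, lora_config
```

Passing `bnb_config` as `quantization_config=` to `AutoModelForCausalLM.from_pretrained` loads the base model in 4-bit. This also applies at inference time, before `PeftModel.from_pretrained`, and cuts weight memory to roughly a quarter of the FP16 load used in the examples. With alpha equal to rank, PEFT's default LoRA scaling factor, alpha / r, is 1.0.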

Environmental Impact

We estimated the carbon footprint of training Alpie Core on 8× NVIDIA H100-80GB GPUs:

Formula: CO₂e (kg) = Grid CO₂ Factor (kg CO₂e/kWh) × Runtime (h) × Power per GPU (kW) × Number of GPUs

Training Parameters:

- Grid CO₂ Factor (Azure): 0.364 kg CO₂e/kWh

- Runtime: 408 hours

- GPUs: 8× H100-80GB

Results:

- Realistic mode (250W avg per GPU): ~298 kg CO₂e

- Conservative mode (700W TDP per GPU): ~835 kg CO₂e

This makes Alpie Core one of the most carbon-efficient reasoning models released to date.
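Plugging the listed parameters into the formula reproduces both estimates to within a few kilograms. The result is about 297 kg CO₂e at 250 W per GPU and about 832 kg at 700 W, compared with the reported ~298 kg and ~835 kg. The card does not explain the small gap; rounding of the inputs is one possibility. The power draw must be converted from watts to kilowatts to match the kg/kWh grid factor. The function name is illustrative.

```python
def co2e_kg(grid_kg_per_kwh: float, hours: float, watts_per_gpu: float, num_gpus: int) -> float:
    """CO2-equivalent emissions in kg: grid factor x runtime x power per GPU x GPUs."""
    kw_per_gpu = watts_per_gpu / 1000  # W -> kW so units match kg CO2e per kWh
    return grid_kg_per_kwh * hours * kw_per_gpu * num_gpus


realistic = co2e_kg(0.364, 408, 250, 8)      # ~297 kg CO2e
conservative = co2e_kg(0.364, 408, 700, 8)   # ~832 kg CO2e
```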

Use Cases

Best for STEM, complex mathematical reasoning, coding, and Indian context

- STEM Education: Advanced problem-solving in science, technology, engineering, mathematics

- Mathematical Reasoning: Multi-step logical and quantitative reasoning

- Software Development: Code generation, debugging, algorithmic problem-solving

- Indian Context: Competitive exam assistance (JEE, NEET, UPSC), Hindi/Hinglish support

- Research & Legal: 65K context for academic papers, legal documents, long-form analysis

Safety and Limitations

Enhanced Content Access

Unlike the base DeepSeek model, Alpie Core gives factual, balanced answers to geopolitically sensitive questions, such as Taiwan's status and the sovereignty of Arunachal Pradesh, making it usable for audiences worldwide on these topics.

Current Limitations

- Multilingual reasoning in Hindi/Hinglish shows room for improvement

- Fixed knowledge cutoff without real-time information retrieval

- Occasional struggles with complex multi-hop mathematical reasoning

- Potential hallucinations in factual question-answering

- Should not be used for medical/legal advice without expert oversight

Mitigations

- Safety classifiers and output filtering systems

- Model-assisted safety pipeline using RLHF

- Comprehensive adversarial testing by domain experts

Python SDK Quick Start

```bash
# Install
pip install pi169

# Set API key
export ALPIE_API_KEY="your_key_here"

# CLI usage
pi169 "Explain 4-bit quantization"
```

SDK Features

- CLI Integration for quick interactions

- Streaming & Non-Streaming completions

- Async/Await Support for concurrent requests

- Type-safe Interface with dataclasses

- Robust Error Handling

- OpenAI-Compatible: Drop-in replacement

Full SDK documentation on PyPI

Advanced Usage Examples

Streaming Inference with Transformers

```python
from transformers import AutoModelForCausalLM, AutoTokenizer, TextStreamer
from peft import PeftModel, PeftConfig
import torch

peft_model_id = "169Pi/Alpie-Core"
config = PeftConfig.from_pretrained(peft_model_id)

base_model = AutoModelForCausalLM.from_pretrained(
    config.base_model_name_or_path,
    torch_dtype=torch.float16,
    device_map="auto",
)
tokenizer = AutoTokenizer.from_pretrained(config.base_model_name_or_path)
model = PeftModel.from_pretrained(base_model, peft_model_id)
model.eval()

# Print tokens to stdout as they are generated, without echoing the prompt
streamer = TextStreamer(tokenizer, skip_prompt=True, skip_special_tokens=True)

prompt = "Explain the P vs NP problem"
inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

print("Streaming Response:")
with torch.no_grad():
    outputs = model.generate(
        **inputs,
        max_new_tokens=1000,
        streamer=streamer,
        do_sample=True,
        temperature=0.7,
        top_p=0.9,
    )
```
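`TextStreamer` prints straight to stdout. When the streamed text is needed inside your own code, for example to forward it from a web handler, Transformers also provides `TextIteratorStreamer`. With it, `generate` runs in a background thread while the caller iterates over decoded pieces. The sketch below wraps that pattern. `stream_generate` is a hypothetical helper, and `model`/`tokenizer` are assumed to be loaded as in the example above.

```python
def stream_generate(model, tokenizer, prompt: str, **generate_kwargs):
    """Yield decoded text pieces while model.generate runs in a background thread."""
    from threading import Thread

    import torch
    from transformers import TextIteratorStreamer

    streamer = TextIteratorStreamer(tokenizer, skip_prompt=True, skip_special_tokens=True)
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

    def _run():
        # Grad mode is thread-local, so disable it inside the worker thread itself
        with torch.no_grad():
            model.generate(**inputs, streamer=streamer, **generate_kwargs)

    thread = Thread(target=_run)
    thread.start()
    for piece in streamer:  # blocks until the next decoded chunk is ready
        yield piece
    thread.join()
```

The following call prints the answer as it is produced: `for piece in stream_generate(model, tokenizer, "Explain the P vs NP problem", max_new_tokens=1000, do_sample=True, temperature=0.7, top_p=0.9): print(piece, end="", flush=True)`.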

Deployment Options

- Transformers: Python, PyTorch integration

- vLLM: High-throughput inference server

- Ollama: Easy local deployment (20GB model size)

- 169Pi API: Production-ready hosted inference
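For the vLLM option, the card does not describe its serving configuration. The following is a minimal offline-batching sketch under two assumptions. First, the Hugging Face repo is a standard PEFT LoRA adapter, as the Transformers examples above imply. Second, the base model loads in its default precision, which for 32B parameters needs roughly 64 GB and may require `tensor_parallel_size` across several GPUs. It is not the 4-bit production setup. `generate_with_vllm` is a hypothetical name.

```python
def generate_with_vllm(prompts, adapter_repo="169Pi/Alpie-Core", max_tokens=1000):
    """Batch-generate with vLLM, applying the LoRA adapter to every request."""
    from huggingface_hub import snapshot_download
    from peft import PeftConfig
    from vllm import LLM, SamplingParams
    from vllm.lora.request import LoRARequest

    # Download the adapter locally and read which base model it was trained on
    adapter_path = snapshot_download(repo_id=adapter_repo)
    base_model = PeftConfig.from_pretrained(adapter_path).base_model_name_or_path

    # LoRA support must be enabled explicitly; rank 16 matches the training details above
    llm = LLM(model=base_model, enable_lora=True, max_lora_rank=16)
    params = SamplingParams(temperature=0.7, top_p=0.9, max_tokens=max_tokens)
    results = llm.generate(
        prompts, params, lora_request=LoRARequest("alpie-core", 1, adapter_path)
    )
    # Each result holds one or more completions; keep the first
    return [r.outputs[0].text for r in results]
```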

Citation

```bibtex
@misc{169pi2025alpiecore,
  title  = {Alpie-Core: A 4-Bit Quantized Reasoning Model from India that Outperforms Full-Precision Models},
  author = {169Pi AI},
  year   = {2025},
  url    = {https://huggingface.co/169Pi/Alpie-Core}
}
```

Community & Contributions

Released under Apache 2.0 - we welcome the community to build, extend, and improve!

- Issues & Discussions: Report bugs or suggest features on Hugging Face

- Contributions: Pull requests welcome for improvements

- Share Results: Post your fine-tuning experiments and benchmarks

- Collaborate: Join us in shaping the future of efficient AI

License

Apache 2.0 License – Permissive for research and commercial use

Acknowledgements

Thanks to DeepSeek for the original model foundation. We also acknowledge:

- Hugging Face ecosystem (Transformers, PEFT, vLLM, bitsandbytes)

- Open-source datasets (MMLU, GSM8K, SWE-Bench, etc.)

- Cloud infrastructure providers

- The broader AI research community

Contact

Technical Support: [email protected]

Alpie Core represents a milestone for open-source AI from India, demonstrating that 4-bit reasoning models can rival frontier-scale systems. We hope this release empowers developers, researchers, and organizations worldwide to build more efficient, inclusive, and impactful AI.

Get started today with 5 million free tokens at 169pi.ai

